Prerequisites

- ✓ A Hugging Face account with an access token

- ✓ A Google account for Google Colab access

- ✓ Basic Python knowledge (helpful but not required)

- ✓ Accept the MedGemma model license on Hugging Face

Hugging Face Setup

1

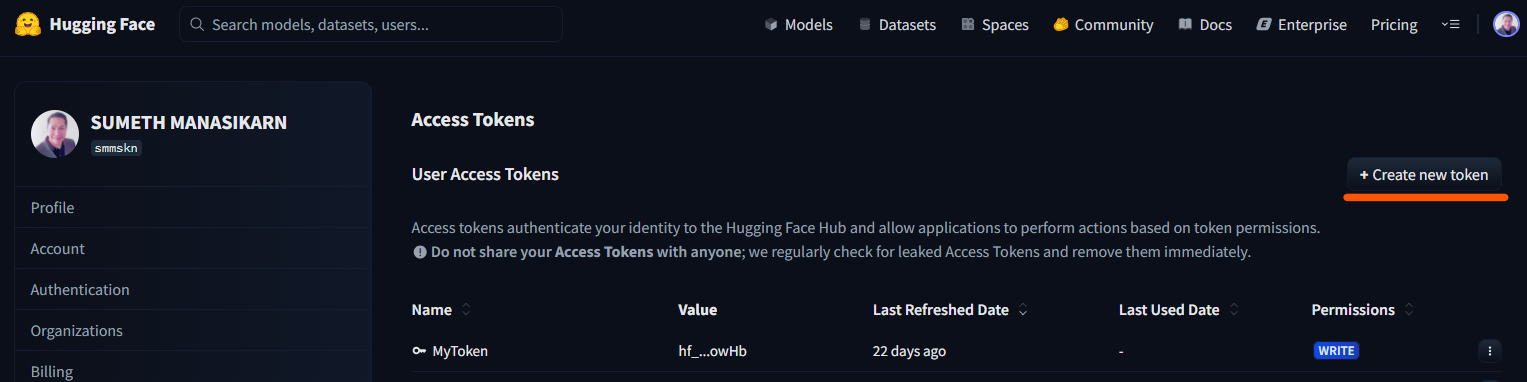

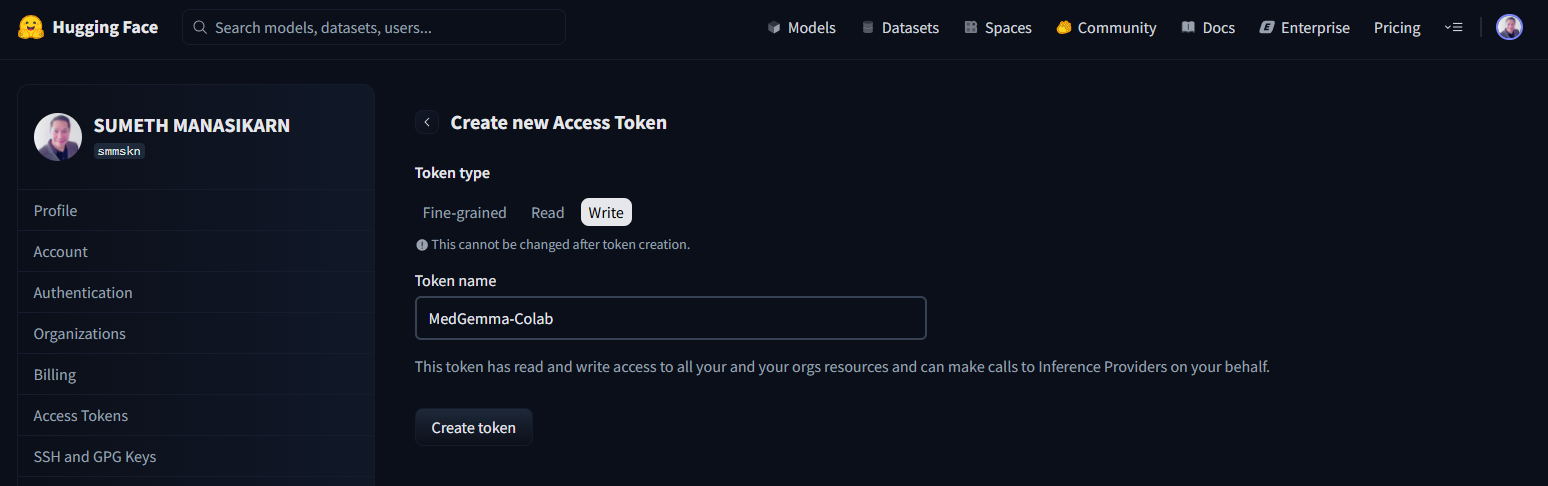

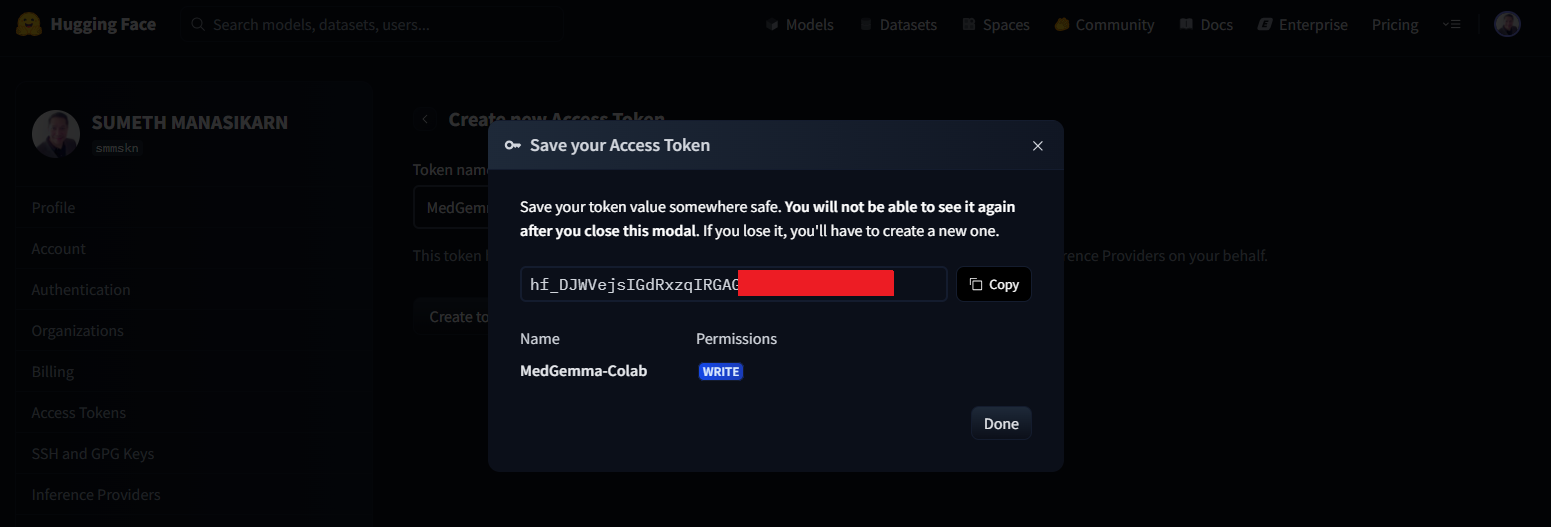

Create a Hugging Face Access Token

Generate an authentication token to access gated models

Navigate to your Hugging Face account settings to create a new access token:

1. Click your profile icon in the top-right corner.

2. Select Access Tokens from the dropdown menu.

3. Click the "+ Create new token" button.

Tip: Access tokens authenticate your identity and allow applications to perform actions on your behalf. Never share your tokens publicly.
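Once the token exists, the notebook needs to read it without hard-coding it into a cell. The sketch below is a minimal, standard-library-only approach. It assumes the token is exposed through an `HF_TOKEN` environment variable (a name `huggingface_hub` also reads) and falls back to a hidden prompt. The helper names `load_hf_token` and `mask_token` are hypothetical, and the `hf_` prefix check is an assumption about the token format.

```python
import os
from getpass import getpass


def load_hf_token(env_var: str = "HF_TOKEN") -> str:
    """Return a Hugging Face access token from the environment, prompting if it is absent."""
    token = os.environ.get(env_var, "").strip()
    if not token:
        # getpass does not echo the input, so the token stays out of the notebook output.
        token = getpass(f"Hugging Face token ({env_var} is not set): ").strip()
    # Assumption: user access tokens start with "hf_"; relax this check if yours differs.
    if not token.startswith("hf_"):
        raise ValueError("This does not look like a Hugging Face user access token.")
    return token


def mask_token(token: str, visible: int = 4) -> str:
    """Redact all but the last `visible` characters so a token is safe to print."""
    if len(token) <= visible:
        return "*" * len(token)
    return "*" * (len(token) - visible) + token[-visible:]
```

Passing the result to `huggingface_hub.login(token=load_hf_token())` authenticates later downloads of gated models. In Colab, storing the token in the Secrets panel and reading it with `google.colab.userdata.get("HF_TOKEN")` is another way to keep it out of the notebook itself.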

Google Colab Setup

Step 3: Enable GPU Runtime

Configure Colab to use a T4 GPU for model inference.

MedGemma 4B needs GPU acceleration for practical inference speed. In Google Colab:

- Go to Runtime → Change runtime type

- Select T4 GPU (or higher) as the hardware accelerator

- Click Save
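To confirm the runtime switch took effect, the sketch below queries `nvidia-smi`, which is available on Colab GPU runtimes, and parses each GPU's name and total memory. The query flags are standard `nvidia-smi` options; the helper names `parse_gpu_query` and `detect_gpus` are hypothetical.

```python
import shutil
import subprocess


def parse_gpu_query(csv_text: str) -> list[tuple[str, int]]:
    """Parse `nvidia-smi --query-gpu=name,memory.total --format=csv,noheader,nounits` output."""
    gpus = []
    for line in csv_text.strip().splitlines():
        # Split on the last comma so GPU names containing commas stay intact.
        name, memory_mib = (field.strip() for field in line.rsplit(",", 1))
        gpus.append((name, int(memory_mib)))
    return gpus


def detect_gpus() -> list[tuple[str, int]]:
    """Return (name, total memory in MiB) for each visible NVIDIA GPU, or [] if none."""
    if shutil.which("nvidia-smi") is None:
        return []  # No NVIDIA tooling found: the runtime is most likely CPU-only.
    try:
        result = subprocess.run(
            ["nvidia-smi", "--query-gpu=name,memory.total", "--format=csv,noheader,nounits"],
            capture_output=True,
            text=True,
            check=True,
        )
    except (subprocess.CalledProcessError, OSError):
        return []
    return parse_gpu_query(result.stdout)
```

Running `print(detect_gpus())` in a cell should list one GPU after switching to a T4 runtime and an empty list on a CPU runtime. Since PyTorch is preinstalled on Colab, `torch.cuda.is_available()` and `torch.cuda.get_device_name(0)` offer an equivalent check.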

Free Tier: T4 GPUs are available on Google Colab's free tier, subject to usage limits, so you can run MedGemma without paying for compute.
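A rough way to see why a T4 is enough: model weights take about (parameter count) × (bytes per parameter). The sketch below assumes roughly 4 billion parameters, as the model name suggests; the exact count is an assumption, and activations, caches, and framework overhead are not included. The function name `weight_memory_gib` is hypothetical.

```python
# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"float32": 4.0, "float16": 2.0, "bfloat16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gib(num_params: float, dtype: str = "float16") -> float:
    """Approximate memory for the model weights alone, in GiB (2**30 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 2**30
```

Under this assumption, `weight_memory_gib(4e9)` comes to about 7.45 GiB at 16-bit precision, which fits in a T4's 16 GB of memory with room left for inference. At `float32` the weights alone reach about 14.9 GiB, leaving almost no headroom.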