Reading Time: 12 min ⏱️

I. Introduction: The Agentic Shift

If 2024 was the year we learned to talk to AI, 2026 is the year AI learns to work for us.

For the last two years, the industry has obsessed over RAG (Retrieval-Augmented Generation) and chat interfaces. But let's be honest: a chatbot that only summarizes text is just a fancy search engine. The real ROI lies in Agentic AI: systems that can reason, use tools, execute workflows, and correct their own mistakes.

Enter the Google Agent Development Kit (ADK)

Google ADK is a meaningful step forward for agent development. It provides a well-structured framework, behaves consistently across use cases, and ships with monitoring and debugging tools that show what your agents are doing at every step. Together, these traits make it a strong choice for production deployments in business environments where stability, transparency, and maintainability are requirements.

In this deep dive, we're moving past the buzzwords. We'll examine the architecture of a modern agent, explore the killer features of ADK, and build a Financial "Deep Research" Analyst from scratch—complete with a flight-control dashboard. Finally, we'll cover the often-ignored topic of Day 2 Operations: deploying these agents securely to Google Cloud.

II. What is Google ADK? (Beyond the Hype)

Google ADK is an open-source, code-first framework available in Python, TypeScript, and Go. It is designed to solve the three hardest problems in AI development: State Management, Tool Orchestration, and Observability.

Unlike a standard API call to Gemini or GPT-4, an ADK Agent acts as a persistent entity. It possesses:

Memory: It remembers context across a long-running session.

Tooling: It has "hands" to interact with APIs, databases, and files.

Metacognition: It can critique its own plan before executing it.

The 3 Features That Change the Game

Bi-Directional Streaming (Multimodal): ADK supports real-time audio and video streams. This enables use cases like a customer service voice bot that can be interrupted naturally.

Generative UI: Agents can render React components (charts, interactive tables, and forms), turning a conversation into a dashboard.

The Agent2Agent (A2A) Protocol: The standardized way for agents to collaborate (detailed below).

Sponsored by Attio for The CRM that scales your business.

Introducing the first AI-native CRM

Connect your email, and you’ll instantly get a CRM with enriched customer insights and a platform that grows with your business.

With AI at the core, Attio lets you:

Prospect and route leads with research agents

Get real-time insights during customer calls

Build powerful automations for your complex workflows

Join industry leaders like Granola, Taskrabbit, Flatfile and more.


III. Deep Dive: The Agent2Agent (A2A) Protocol

One of the biggest friction points in 2024 was getting different AI models to talk to each other. How does a Python-based "Data Scientist" agent share memory with a Java-based "Backend" agent?

Google ADK supports the A2A Protocol, an open communication standard that acts like TCP/IP for agents. It moves beyond simple text prompts and enforces a strict Contract-Based Handshake.

How the Handshake Works

When Agent A (The Manager) delegates a task to Agent B (The Worker), it doesn't just say "Do this." It transmits a structured payload containing:

The Goal: The specific objective.

The Constraints: Budget, time limits, or forbidden tools.

The Context Snapshot: A compressed vector summary of the conversation so far, so Agent B doesn't start from zero.
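The three elements above map naturally onto typed objects. Here is a minimal Python sketch whose field names mirror the example JSON message in this section (they are not an official A2A schema), plus one way a worker could enforce the constraints before running a tool. `Constraints`, `TaskPayload`, `build_message`, and `may_call_tool` are hypothetical names, and the cost figures are made up.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class Constraints:
    max_cost_usd: float
    forbidden_tools: list[str] = field(default_factory=list)
    required_format: str = "json_schema_v1"


@dataclass
class TaskPayload:
    task_id: str
    instruction: str      # the goal
    context_vector: str   # compressed context snapshot, opaque to this sketch
    constraints: Constraints


def build_message(sender: str, recipient: str, payload: TaskPayload,
                  callback_webhook: str) -> str:
    """Serialize a delegation message shaped like the example JSON (hypothetical schema)."""
    message = {
        "protocol": "A2A_v2",
        "sender": sender,
        "recipient": recipient,
        "payload": asdict(payload),  # asdict also converts the nested Constraints
        "callback_webhook": callback_webhook,
    }
    return json.dumps(message, indent=2)


def may_call_tool(constraints: Constraints, tool_name: str,
                  spent_usd: float, estimated_usd: float) -> bool:
    """Worker-side check: refuse forbidden tools and calls that would exceed the budget."""
    if tool_name in constraints.forbidden_tools:
        return False
    return spent_usd + estimated_usd <= constraints.max_cost_usd


if __name__ == "__main__":
    payload = TaskPayload(
        task_id="8823-xf92",
        instruction="Analyze risk factors for ticker NVDA.",
        context_vector="eJ12...",
        constraints=Constraints(max_cost_usd=0.50,
                                forbidden_tools=["public_internet_search"]),
    )
    print(build_message("manager_agent_01", "researcher_agent_04", payload,
                        "https://api.internal/agent/callback"))
```

Putting the budget check on the worker side is a deliberate choice in this sketch: the manager states the limit, but only the worker knows what each tool call it makes will cost.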

The JSON Structure

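Conceptually, an inter-agent message carrying these three elements looks something like this (a simplified illustration; the official A2A specification defines its own message format on top of JSON-RPC 2.0):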

```json
{
  "protocol": "A2A_v2",
  "sender": "manager_agent_01",
  "recipient": "researcher_agent_04",
  "payload": {
    "task_id": "8823-xf92",
    "instruction": "Analyze risk factors for ticker NVDA.",
    "context_vector": "eJ12... (compressed memory embedding)",
    "constraints": {
      "max_cost_usd": 0.50,
      "forbidden_tools": ["public_internet_search"],
      "required_format": "json_schema_v1"
    }
  },
  "callback_webhook": "https://api.internal/agent/callback"
}
```

Why This Matters

This protocol lets you mix different AI models in one system. Your "Manager" can be a powerful Gemini 3 Pro model running on Vertex AI, while your "Worker" agents are smaller, less expensive Llama 3 models running on your own servers. As long as they all communicate using A2A, they can work together. The payoff is cost: you pay frontier-model prices only for planning and delegation, and route high-volume subtasks to cheaper models.
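To make the cost argument concrete, here is a hypothetical sketch of how a manager might pick a worker: each worker advertises its skills and a relative cost tier, and the manager delegates to the cheapest worker that has the required skill. The agent names, model labels, skills, and cost tiers are invented for illustration. In A2A, remote agents describe their skills in an Agent Card, which a real implementation would read instead of this hard-coded list.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class WorkerInfo:
    name: str
    model: str          # backing model; the manager's logic never depends on it
    skills: frozenset   # capabilities the worker advertises
    cost_tier: int      # relative cost, lower is cheaper (hypothetical units)


# Hypothetical roster mixing a self-hosted model with a hosted one.
WORKERS = [
    WorkerInfo("researcher_agent_04", "Llama 3 (self-hosted)",
               frozenset({"risk_analysis", "summarize"}), cost_tier=1),
    WorkerInfo("strategist_agent_02", "Gemini 3 Pro (Vertex AI)",
               frozenset({"risk_analysis", "summarize", "planning"}), cost_tier=5),
]


def pick_worker(skill: str, workers=WORKERS) -> WorkerInfo:
    """Return the cheapest worker that advertises `skill`."""
    capable = [w for w in workers if skill in w.skills]
    if not capable:
        raise LookupError(f"No worker advertises skill {skill!r}")
    return min(capable, key=lambda w: w.cost_tier)


if __name__ == "__main__":
    print(pick_worker("risk_analysis").name)  # researcher_agent_04
    print(pick_worker("planning").name)       # strategist_agent_02
```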

IV. Hands-On: Building the "Deep Research" Finance Analyst

Let’s leave the theory behind. We are going to build an agent that doesn't just chat, but actively reads a PDF 10-K filing, cites specific page numbers, and generates an investment memo.

To emulate the production standard, we will not write a messy single script. We will build the "Eyes, Brain, Face" Architecture using the official Google ADK structure.

The Tech Stack:

Model: Gemini 3 Pro (Preview)

Framework: Google ADK (Python SDK)

Interface: ADK Native Web (The Face)

Tools: PyPDF (The Eyes)

Implementation Preview: Your Deep Research Finance Analyst

Step 1: The "Eyes" (Defining the Tool — tools.py)

First, we handle the data processing separately. An LLM (Large Language Model) cannot "see" a PDF; we must convert it into text. Importantly, we insert Page Markers so the agent can cite its sources later.

File: finance_analyst/tools.py
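Here is a minimal sketch of what tools.py could contain, assuming the pypdf package and the `parse_earnings_report` tool name that appears later in the trace. The `[Page N]` marker format, the helper `format_pages_with_markers`, and the returned dict shape are our assumptions. ADK wraps a plain Python function as a tool and uses its signature and docstring to describe it to the model, so the docstring is part of the tool's interface.

```python
"""finance_analyst/tools.py: PDF-to-text tool with page markers (sketch)."""
from typing import Iterable, Optional


def format_pages_with_markers(page_texts: Iterable[Optional[str]]) -> str:
    """Join per-page text, prefixing each page with a 1-based [Page N] marker."""
    sections = []
    for page_number, text in enumerate(page_texts, start=1):
        sections.append(f"[Page {page_number}]\n{(text or '').strip()}")
    return "\n\n".join(sections)


def parse_earnings_report(file_path: str) -> dict:
    """Extracts the text of a PDF filing (such as a 10-K), marking where each page starts.

    Args:
        file_path: Path to the PDF file on local disk.

    Returns:
        A dict with "status" plus either "content" (page-marked text) or "error_message".
    """
    # Imported here so the formatting helper above works without pypdf installed.
    from pypdf import PdfReader
    from pypdf.errors import PdfReadError

    try:
        reader = PdfReader(file_path)
        pages = [page.extract_text() for page in reader.pages]
    except (OSError, PdfReadError) as exc:
        return {"status": "error", "error_message": f"Could not read {file_path}: {exc}"}

    return {
        "status": "success",
        "page_count": len(pages),
        "content": format_pages_with_markers(pages),
    }
```

Returning an error status instead of raising lets the model see a readable message (for example, a wrong file path) and recover, rather than the whole run failing.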

Step 2: The "Brain" (agent.py)

Now we initialize the agent with a specific persona and mandatory citation rules. Notice the instruction (the agent's system prompt): it is critical for ensuring the agent behaves like a professional analyst, not a creative writer.

File: finance_analyst/agent.py
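A minimal sketch of agent.py, assuming ADK's Python `Agent` class and a `parse_earnings_report` tool in tools.py that marks pages as `[Page N]`. The model ID `gemini-3-pro-preview` is an assumption; use whichever Gemini 3 Pro identifier your project exposes. For `adk web` to discover the agent, ADK's convention is a module-level variable named `root_agent`, plus a `finance_analyst/__init__.py` containing `from . import agent`.

```python
"""finance_analyst/agent.py: the analyst's persona, rules, and tools (sketch)."""
from google.adk.agents import Agent

from .tools import parse_earnings_report

# Assumed model ID for Gemini 3 Pro (Preview); adjust to what your project offers.
MODEL_ID = "gemini-3-pro-preview"

# Avoid curly braces here: ADK treats {name} in an instruction as a state variable.
ANALYST_INSTRUCTION = """You are a senior equity research analyst.
- Before answering any question about a filing, call parse_earnings_report on it.
- Base every claim on the tool output and cite its page, e.g. (p. 14),
  using the [Page N] markers in the extracted text.
- If the filing does not contain the answer, say so instead of guessing.
- Finish with a short investment memo: Summary, Key Risks, Open Questions."""

# ADK's loader looks for a module-level variable named root_agent.
root_agent = Agent(
    name="finance_analyst",
    model=MODEL_ID,
    description="Reads 10-K filings and writes page-cited investment memos.",
    instruction=ANALYST_INSTRUCTION,
    tools=[parse_earnings_report],  # plain functions are wrapped as tools
)
```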

Step 3: The "Face" (Native Flight Control)

Previously, creating a simple chat interface required writing 50 lines of complex code. Google ADK eliminates this work entirely.

Instead of writing a custom app.py, we use ADK's built-in developer UI to launch a debugging dashboard instantly.

```bash
# Launch the native developer UI using Google ADK.
# Run this from the directory that contains the finance_analyst/ folder.
adk web
```

What just happened? With a single command, you launched a "Flight Control" dashboard that goes far beyond a simple chat interface:

The X-Ray Trace: On the left panel, you don't just see the answer; you see the cognitive steps. You can watch the exact moment the agent decides to call `parse_earnings_report`.

State Inspection: You can view the raw JSON state of the agent's memory, allowing you to debug exactly what context was retained or lost.

Zero Boilerplate: We went from "Concept" to "Interactive UI" without writing a single line of frontend code.

The Result: Navigate to http://localhost:8000 and select finance_analyst from the agent dropdown. You will see your Finance Analyst ready to work. Ask it to "Analyze the risks in the Nvidia PDF" and watch it work through the document in real time.

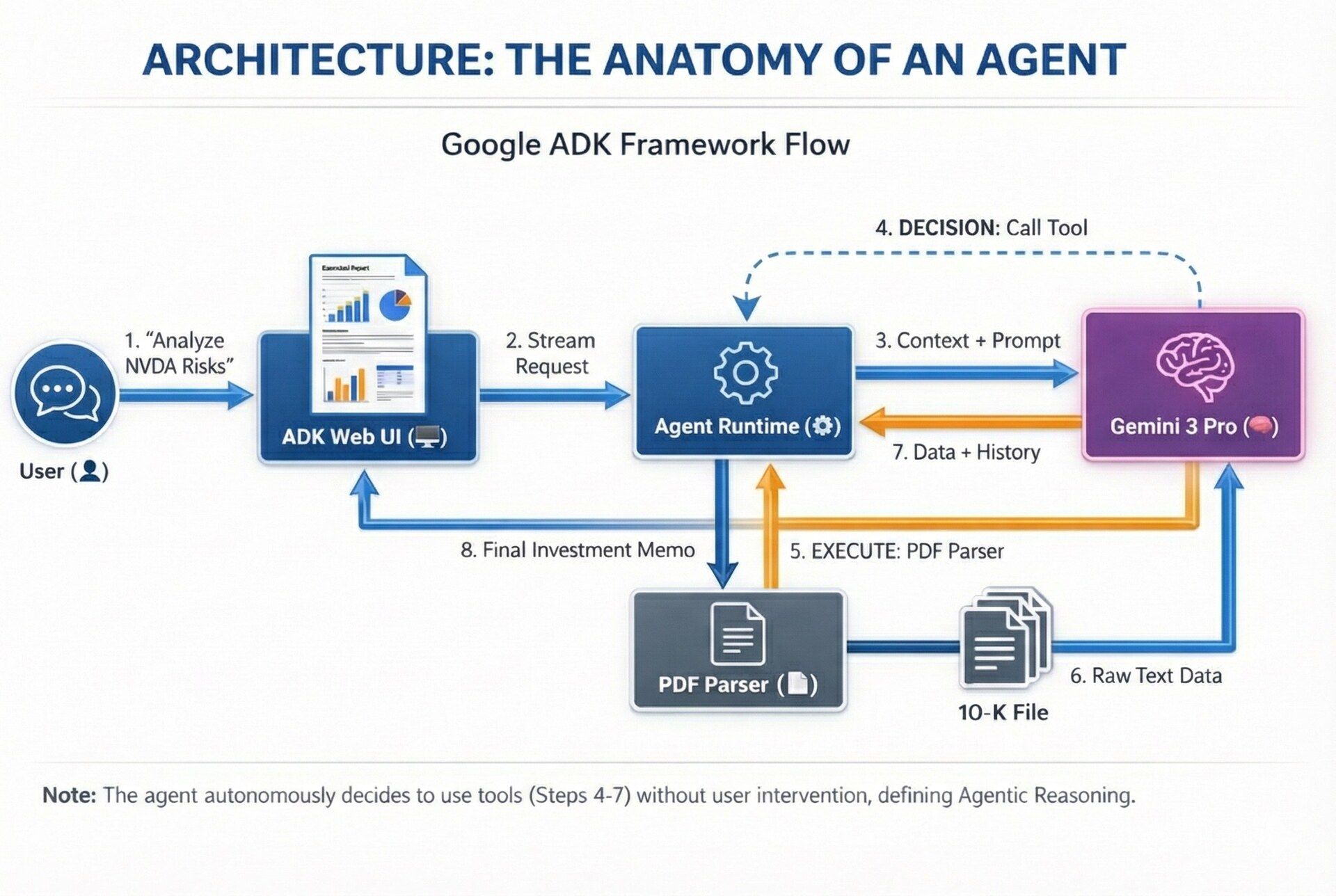

V. Architecture: The Anatomy of an Agent

When you run the code above, you aren't just running a script; you are spinning up a complex orchestration layer. Here is how the data flows.

Key Takeaway: Notice steps 4, 5, and 6. The User never sees this. The Agent decides it needs to read the file, pauses generation, executes the Python function, ingests the data, and then continues. This is the definition of Agentic Reasoning.
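The pause, execute, resume cycle can be sketched as a generic tool-calling loop. This is a stdlib-only illustration, not ADK's internal implementation: `scripted_model` is a hypothetical stand-in for Gemini that first requests the filing and then answers from it, and `fake_parse_earnings_report` is a stub that returns canned text instead of reading a PDF.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class ModelTurn:
    """One model response: either final text or a request to call a tool."""
    text: Optional[str] = None
    tool_name: Optional[str] = None
    tool_args: Optional[dict] = None


def run_agent_loop(model: Callable[[list], ModelTurn], tools: dict,
                   user_message: str, max_steps: int = 5) -> tuple:
    """Alternate between model and tools until the model answers in plain text."""
    history = [{"role": "user", "content": user_message}]
    for _ in range(max_steps):
        turn = model(history)
        if turn.tool_name is None:
            # No tool requested: this is the final answer the user sees.
            history.append({"role": "model", "content": turn.text})
            return turn.text, history
        # The model pauses generation and asks for a tool call...
        history.append({"role": "model", "tool_call": turn.tool_name,
                        "args": turn.tool_args})
        # ...the runtime executes the Python function...
        result = tools[turn.tool_name](**(turn.tool_args or {}))
        # ...and the result goes back into the history so the model can continue.
        history.append({"role": "tool", "name": turn.tool_name, "content": result})
    raise RuntimeError(f"No final answer after {max_steps} model calls")


def fake_parse_earnings_report(file_path: str) -> dict:
    """Stub tool: returns page-marked text instead of reading a real PDF."""
    return {"status": "success", "content": "[Page 1]\nExample risk factor text."}


def scripted_model(history: list) -> ModelTurn:
    """Stand-in for the LLM: request the filing first, then answer from it."""
    last = history[-1]
    if last["role"] == "user":
        return ModelTurn(tool_name="parse_earnings_report",
                         tool_args={"file_path": "nvda_10k.pdf"})
    risk = last["content"]["content"].splitlines()[1]
    return ModelTurn(text=f"Key risk: {risk} (p. 1)")


if __name__ == "__main__":
    answer, history = run_agent_loop(
        scripted_model,
        {"parse_earnings_report": fake_parse_earnings_report},
        "Analyze the risks in the Nvidia PDF",
    )
    print(answer)  # Key risk: Example risk factor text. (p. 1)
    print([step["role"] for step in history])  # ['user', 'model', 'tool', 'model']
```

The `max_steps` cap is a safety valve: a model that keeps requesting tools fails loudly instead of looping forever.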

VI. Day 2 Operations: Deploying to Google Cloud

A major critique of early agent demos was that they only worked on localhost. How do you take the Finance Analyst we just built and scale it to handle 10,000 requests?

ADK is built with "Cloud Native" principles by default. It includes a CLI that bridges the gap between Python scripts and Docker containers.

1. Containerization

You don't need to write a Dockerfile manually. The ADK CLI's `adk deploy cloud_run` command generates one for your agent folder, builds the container image, and deploys it to Cloud Run in a single step:

```bash
# Run from the directory that contains finance_analyst/
adk deploy cloud_run \
  --project=$GOOGLE_CLOUD_PROJECT \
  --region=$GOOGLE_CLOUD_LOCATION \
  finance_analyst
```

If you would rather build and manage the image yourself, you can deploy it with gcloud instead.

2. Deploying to Cloud Run

We use Google Cloud Run for serverless execution. This ensures you only pay when the agent is actually working.

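```bash
# "finance-agent-api-key" is a placeholder Secret Manager secret name.
# --allow-unauthenticated makes the endpoint public; drop it for internal agents.
gcloud run deploy finance-analyst-agent \
  --image gcr.io/my-project/finance-agent:v1 \
  --allow-unauthenticated \
  --set-secrets GOOGLE_API_KEY=finance-agent-api-key:latest
```

3. Security: Workload Identity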

Never hardcode API keys in production. ADK integrates natively with Google Service Accounts. Instead of passing an API key, you assign a Service Account to your Cloud Run instance.

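Once Gemini calls are routed through Vertex AI, authentication is automatic: the client picks up the service account's credentials through Application Default Credentials.

```python
import os

# Production code: no API keys needed.
if os.environ.get("ENV") == "production":
    # Route Gemini calls through Vertex AI. The google-genai client underneath ADK
    # then authenticates with Application Default Credentials, which on Cloud Run
    # resolve to the service account attached to the service.
    # Set this before the agent's first model call; GOOGLE_CLOUD_PROJECT and
    # GOOGLE_CLOUD_LOCATION must also be set.
    os.environ.setdefault("GOOGLE_GENAI_USE_VERTEXAI", "TRUE")
```

This "Zero Trust" approach is a large part of ADK's appeal for enterprises. Paired with a least-privilege service account, it ensures your agent can access only the specific buckets and databases it needs, and nothing else.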

VII. Real-World Use Cases

Beyond finance, where is this being used in 2026?

1. The "Self-Healing" DevOps Bot

Scenario: A server crashes at 3 AM.

Agent Workflow: The agent receives the alert (PagerDuty), queries the logs (Splunk Tool), identifies a memory leak, drafts a fix (GitHub Tool), and asks a Senior Engineer for approval.

Benefit: Reduces Mean Time to Recovery (MTTR) from hours to minutes.
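The step that makes this workflow safe is the approval gate: the agent may investigate and draft on its own, but nothing reaches production without a human yes. A minimal, stdlib-only sketch of that gate follows. `Incident` and `handle_incident` are hypothetical names, and the four callables are placeholders for the PagerDuty, Splunk, and GitHub tools and the on-call engineer's decision.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Incident:
    alert_id: str
    diagnosis: str = ""
    proposed_fix: str = ""
    status: str = "open"


def handle_incident(
    incident: Incident,
    diagnose: Callable[[str], str],            # e.g. query logs for the alert
    draft_fix: Callable[[str], str],           # e.g. open a draft pull request
    request_approval: Callable[[Incident], bool],
    apply_fix: Callable[[str], None],
) -> Incident:
    """Investigate and draft automatically, but apply only a human-approved fix."""
    incident.diagnosis = diagnose(incident.alert_id)
    incident.proposed_fix = draft_fix(incident.diagnosis)
    if request_approval(incident):             # human-in-the-loop gate
        apply_fix(incident.proposed_fix)
        incident.status = "fixed"
    else:
        incident.status = "escalated"          # a human takes over from here
    return incident


if __name__ == "__main__":
    result = handle_incident(
        Incident("alert-001"),
        diagnose=lambda alert_id: "memory leak in worker process",
        draft_fix=lambda diagnosis: f"patch for {diagnosis}",
        request_approval=lambda inc: False,    # the engineer declines
        apply_fix=print,
    )
    print(result.status)  # escalated
```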

2. Legal Contract Review

Scenario: Reviewing 500 vendor contracts for compliance.

Agent Workflow: A "Reader Agent" extracts clauses, a "Lawyer Agent" compares them against the new 2026 AI Regulation Act, and a "Writer Agent" flags violations.

Benefit: Scales legal review without scaling headcount.
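In ADK, a fixed hand-off like this maps onto a workflow agent. Here is a sketch assuming ADK's `SequentialAgent`, which runs its sub-agents in order, and the `output_key` parameter, which saves an agent's final response into session state so a later agent's instruction can reference it as `{clauses}` or `{assessment}`. The agent names, instructions, checklist placeholder, and model ID are hypothetical.

```python
from google.adk.agents import LlmAgent, SequentialAgent

MODEL_ID = "gemini-3-pro-preview"  # assumed model ID; adjust for your project

# Hypothetical placeholder: the requirements your legal team signs off on.
COMPLIANCE_CHECKLIST = "<paste your compliance requirements here>"

reader_agent = LlmAgent(
    name="reader_agent",
    model=MODEL_ID,
    instruction="Extract every clause from the contract provided by the user. "
                "Return a numbered list with each clause's heading and full text.",
    output_key="clauses",  # final response is stored in session state["clauses"]
)

lawyer_agent = LlmAgent(
    name="lawyer_agent",
    model=MODEL_ID,
    instruction="Review each clause against these requirements:\n"
                + COMPLIANCE_CHECKLIST
                + "\nMark every clause COMPLIANT or VIOLATION with a one-line reason."
                "\n\nClauses:\n{clauses}",  # injected from session state
    output_key="assessment",
)

writer_agent = LlmAgent(
    name="writer_agent",
    model=MODEL_ID,
    instruction="Write a short report listing only the clauses marked VIOLATION, "
                "with the reason for each.\n\nAssessment:\n{assessment}",
)

# Runs reader -> lawyer -> writer in order on every request.
root_agent = SequentialAgent(
    name="contract_review",
    sub_agents=[reader_agent, lawyer_agent, writer_agent],
)
```

A fixed pipeline suits this use case because the order of steps never changes; for open-ended tasks, letting an LLM agent decide when to delegate may fit better.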

VIII. Conclusion: The Code is the Easy Part

The code we wrote today is simple. The challenge—and the opportunity—lies in Orchestration.

Google ADK provides the blueprint. The question is: What will you build with it?

Where to Go Next

For further exploration, consider these helpful resources: